Configure Moveworks Toxicity Filter

Overview

The Moveworks AI Assistant is a general-purpose employee service tool that is instructed not to engage on sensitive topics. In these scenarios, the expected behavior is for the bot to decline the request. Using machine learning, Moveworks analyzes every incoming request for potentially toxic or work-inappropriate content. Moveworks supplements GPT's own toxicity check with a fine-tuned large language model (FLAN-T5) that assesses appropriateness for work environments. It also applies a policy that directs the AI Assistant not to engage with the user when such a request is detected. When the toxicity filter is triggered, the user receives a message similar to "I'm unable to assist with that request," with no acknowledgement of the underlying issue.

Examples of content that triggers the filter: language that is hateful, abusive, derogatory, or offensive.

In some cases, the default Moveworks toxicity filter can be too broad, especially for HR-related inquiries. For example, a customer may have relevant knowledge about workplace harassment (such as internal policies or reporting procedures) that should be surfaced to users.

How this Feature Works

To handle these cases, Moveworks allows admins to define exceptions to the default restricted topics list, ensuring users can access appropriate knowledge while maintaining the AI Assistant's strong, built-in safeguards against toxic or offensive content.

When customer content is currently being blocked, you can provide a list of topics the bot is explicitly allowed to engage on. Allowing a topic lets the bot serve the request and surface the relevant knowledge. The configured topics apply to every subsequent interaction with the Moveworks AI Assistant.

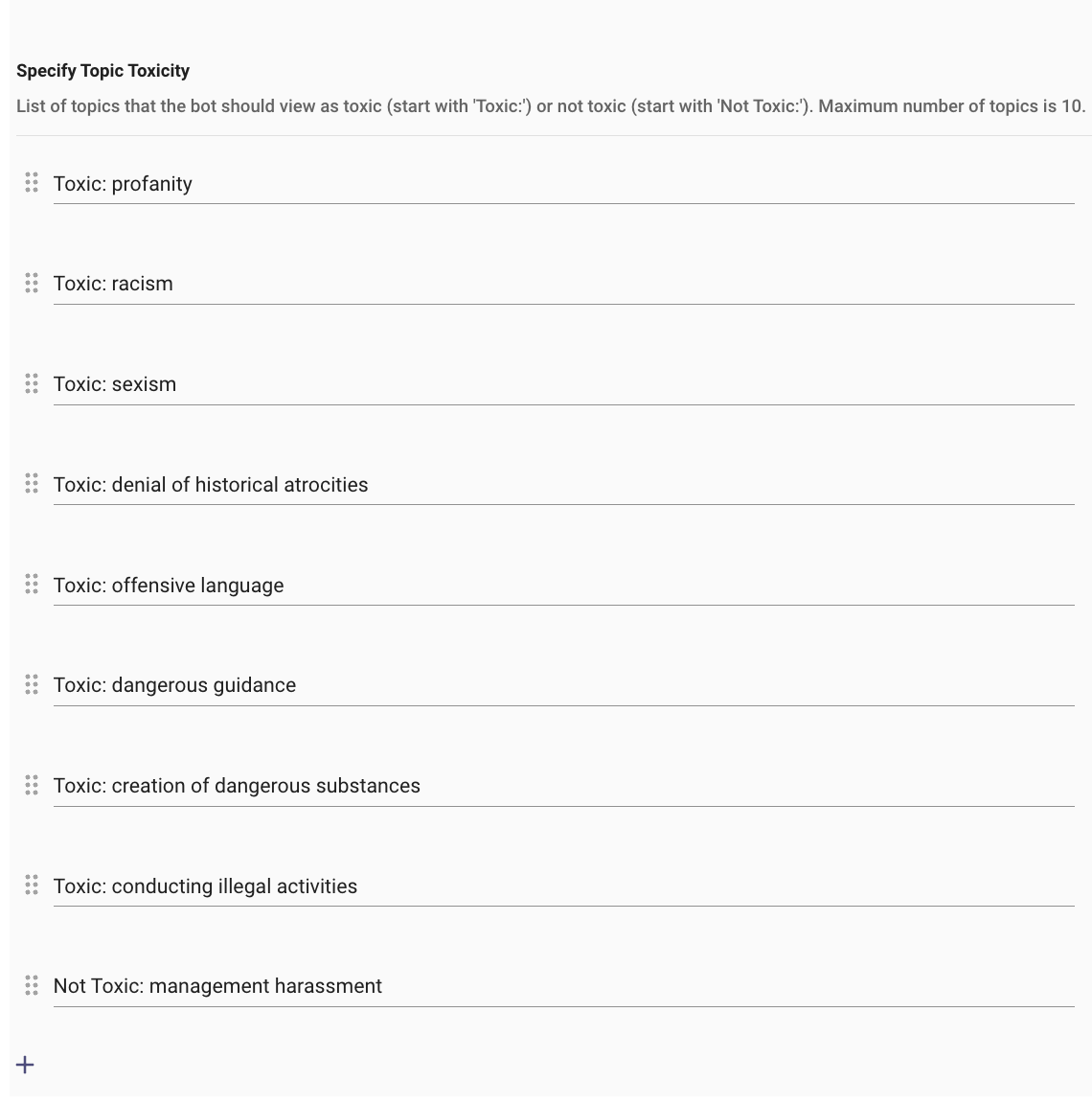

Note: The out-of-the-box toxicity topics are pre-populated for every organization by default. Moveworks currently supports up to 10 custom topics. Each can be designated as either toxic (blocked) or not toxic (allowed) and can contain at most 100 characters.
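If you draft your custom topics outside the console first, the limits in the note above can be checked locally before you enter them. The sketch below is a hypothetical helper, not a Moveworks API: the constant names and `find_limit_violations` are illustrative. It assumes the 100-character limit applies to the full entry string, prefix included, and that only the custom topics you add count toward the limit of 10.

```python
# Hypothetical pre-flight check for a draft list of custom toxicity topics.
# Not a Moveworks API. Limits come from this article: at most 10 custom topics,
# each at most 100 characters. ASSUMPTION: the full entry string (including the
# "Toxic:" / "Not Toxic:" prefix) is what counts toward the 100 characters.
from typing import List

MAX_CUSTOM_TOPICS = 10
MAX_TOPIC_CHARS = 100


def find_limit_violations(entries: List[str]) -> List[str]:
    """Return human-readable problems; an empty list means the draft fits the limits."""
    problems = []
    if len(entries) > MAX_CUSTOM_TOPICS:
        problems.append(
            f"{len(entries)} topics supplied; at most {MAX_CUSTOM_TOPICS} are supported"
        )
    for i, entry in enumerate(entries, start=1):
        if len(entry) > MAX_TOPIC_CHARS:
            problems.append(
                f"topic {i} is {len(entry)} characters; the maximum is {MAX_TOPIC_CHARS}"
            )
    return problems


if __name__ == "__main__":
    draft = [
        "Not Toxic: Questions about workplace harassment policies and how to report harassment",
        "Not Toxic: HR resources for employees",
    ]
    print(find_limit_violations(draft) or "Draft fits the documented limits")
```

Returning a list of problems rather than raising on the first one lets you fix every oversized or surplus entry in a single pass.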

Prerequisites

Before configuring custom exceptions for toxicity, please gather the following:

- A list of specific utterances (e.g., "how do I report harassment?") that are currently being blocked but should be allowed to surface knowledge or other resources.

- 2-3 proposed topics that broadly cover or describe the utterances listed in item 1 (e.g., "Workplace Policy," "HR Resources").

Configuration Steps

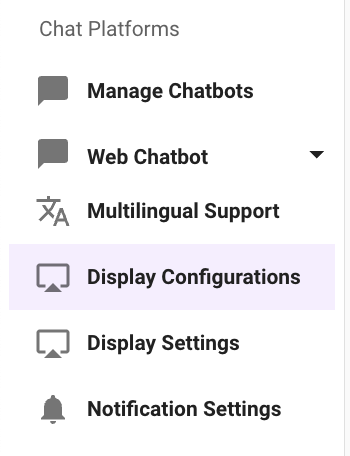

Step 1: Navigate to Display Configurations

- Navigate to Chat Platforms -> Display Configurations

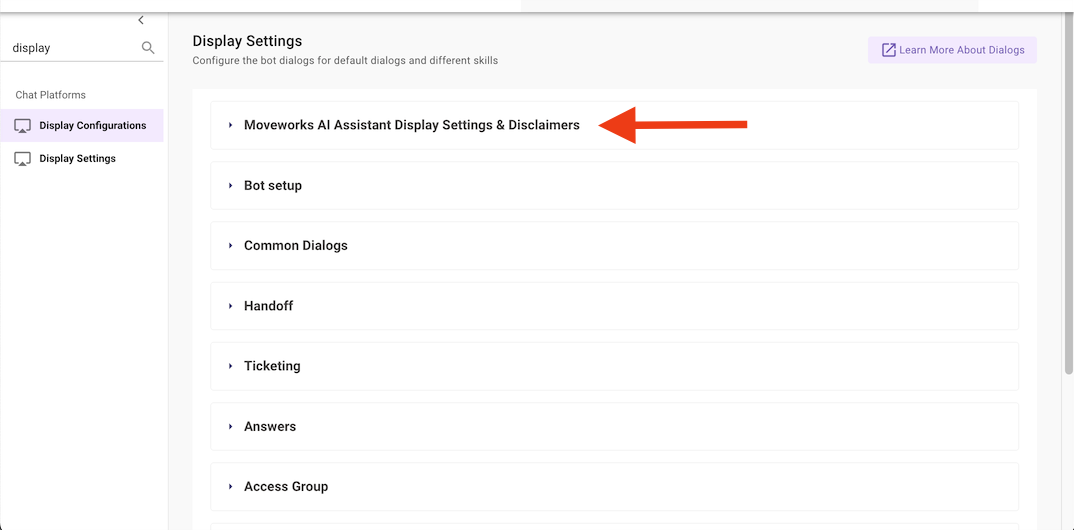

Step 2: Navigate to the Moveworks AI Assistant Display Settings & Disclaimers toggle

- Scroll down to the Moveworks AI Assistant Display Settings & Disclaimers toggle and click it to open the module.

Step 3: Navigate to the Toxicity section

- Scroll down to the Specific Topic Toxicity section.

Each entry must begin with either Toxic: or Not Toxic:. The model uses this prefix directly to determine whether a user conversation is toxic.

- Click the + icon to add a new topic and example utterance, or to update an existing prompt.
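Because every entry needs the Toxic: or Not Toxic: prefix, a draft entry can be checked for it before being pasted into the Specific Topic Toxicity section. The helper below, `parse_topic_entry`, is a hypothetical sketch and not part of any Moveworks product. Case-sensitive prefix matching, tolerance for surrounding whitespace, and rejecting an entry with no text after the prefix are all assumptions.

```python
# Hypothetical helper (not a Moveworks API) that reads the designation from a
# topic entry. Per this article, each entry must begin with "Toxic:" or "Not Toxic:".
# ASSUMPTIONS: the prefix is case-sensitive, surrounding whitespace is ignored,
# and an entry with nothing after the prefix is invalid.
from typing import Tuple

PREFIXES = {"Toxic:": "toxic", "Not Toxic:": "not toxic"}


def parse_topic_entry(entry: str) -> Tuple[str, str]:
    """Split an entry into (designation, description); raise ValueError if invalid."""
    text = entry.strip()
    for prefix, designation in PREFIXES.items():
        if text.startswith(prefix):
            description = text[len(prefix):].strip()
            if not description:
                raise ValueError(f"entry has a prefix but no topic text: {entry!r}")
            return designation, description
    raise ValueError(f"entry must start with 'Toxic:' or 'Not Toxic:': {entry!r}")


if __name__ == "__main__":
    print(parse_topic_entry("Not Toxic: Workplace Policy"))  # ('not toxic', 'Workplace Policy')
```

"Not Toxic:" does not start with "Toxic:", so the two prefixes cannot shadow each other and the dictionary order does not matter.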

FAQs

- Q: Does this override underlying toxicity protections in GPT models?

A: No. This setting affects only the Moveworks-specific toxicity check. The OpenAI or Azure toxicity check is applied separately, and Moveworks does not control it.
