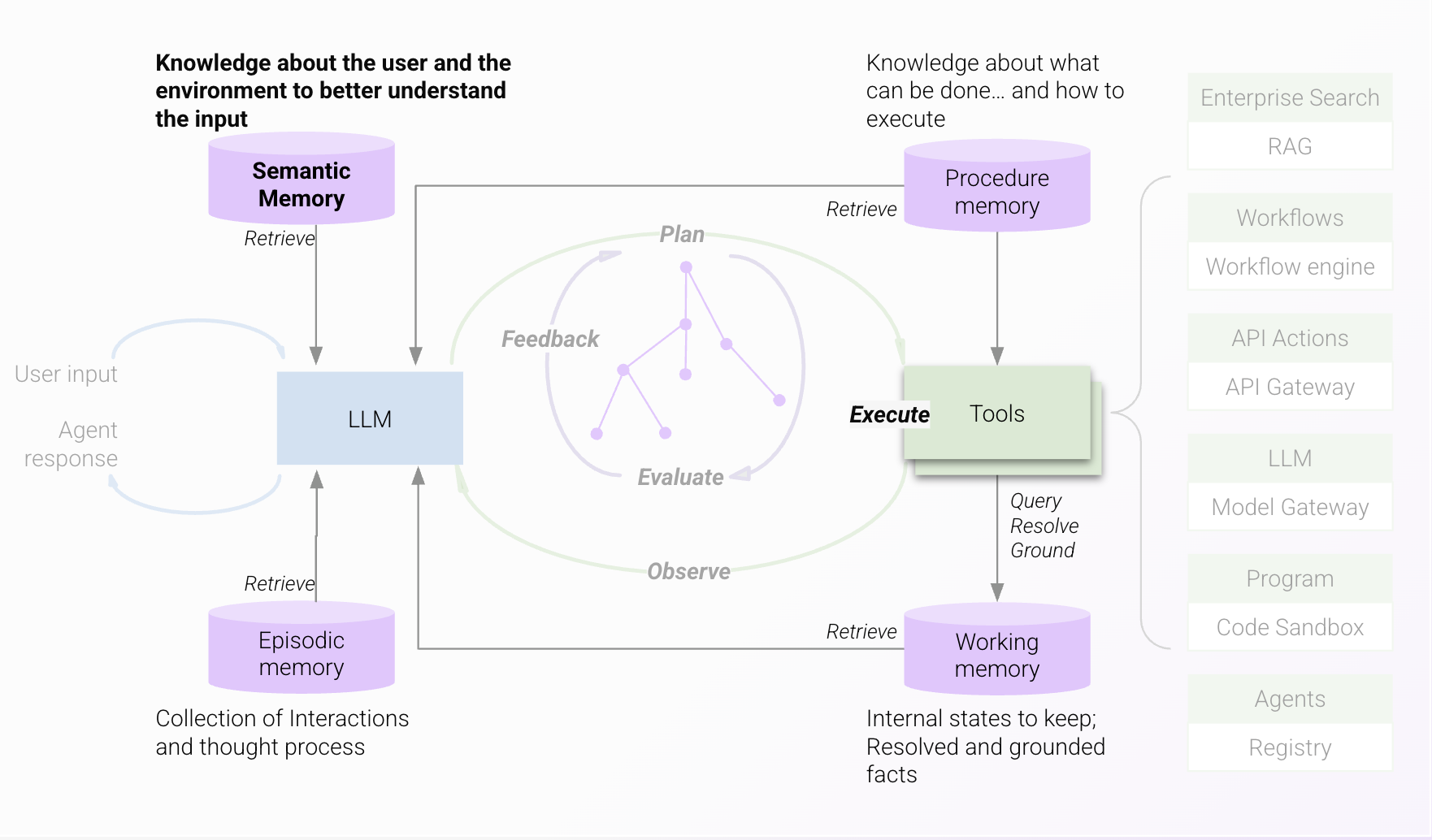

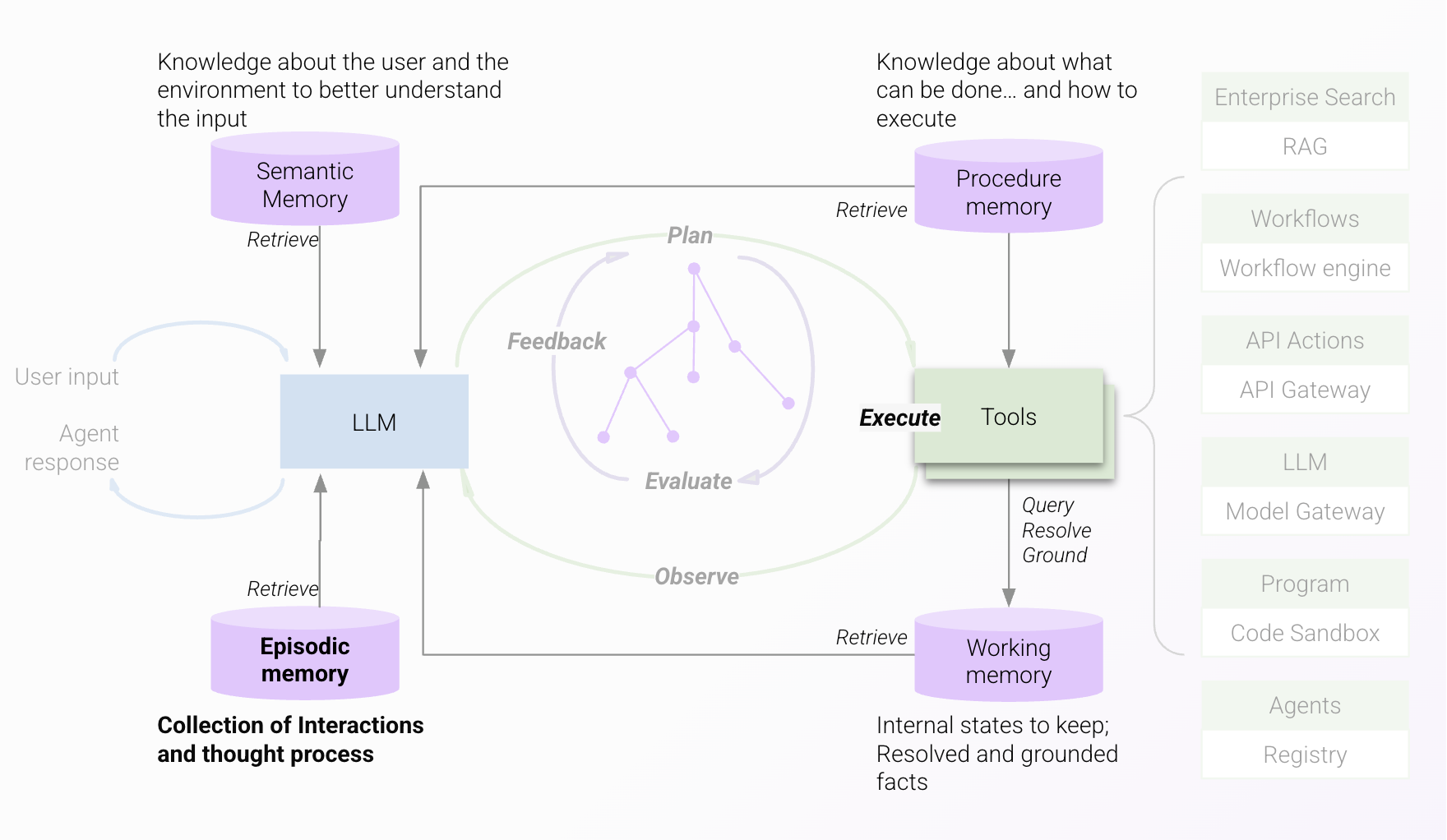

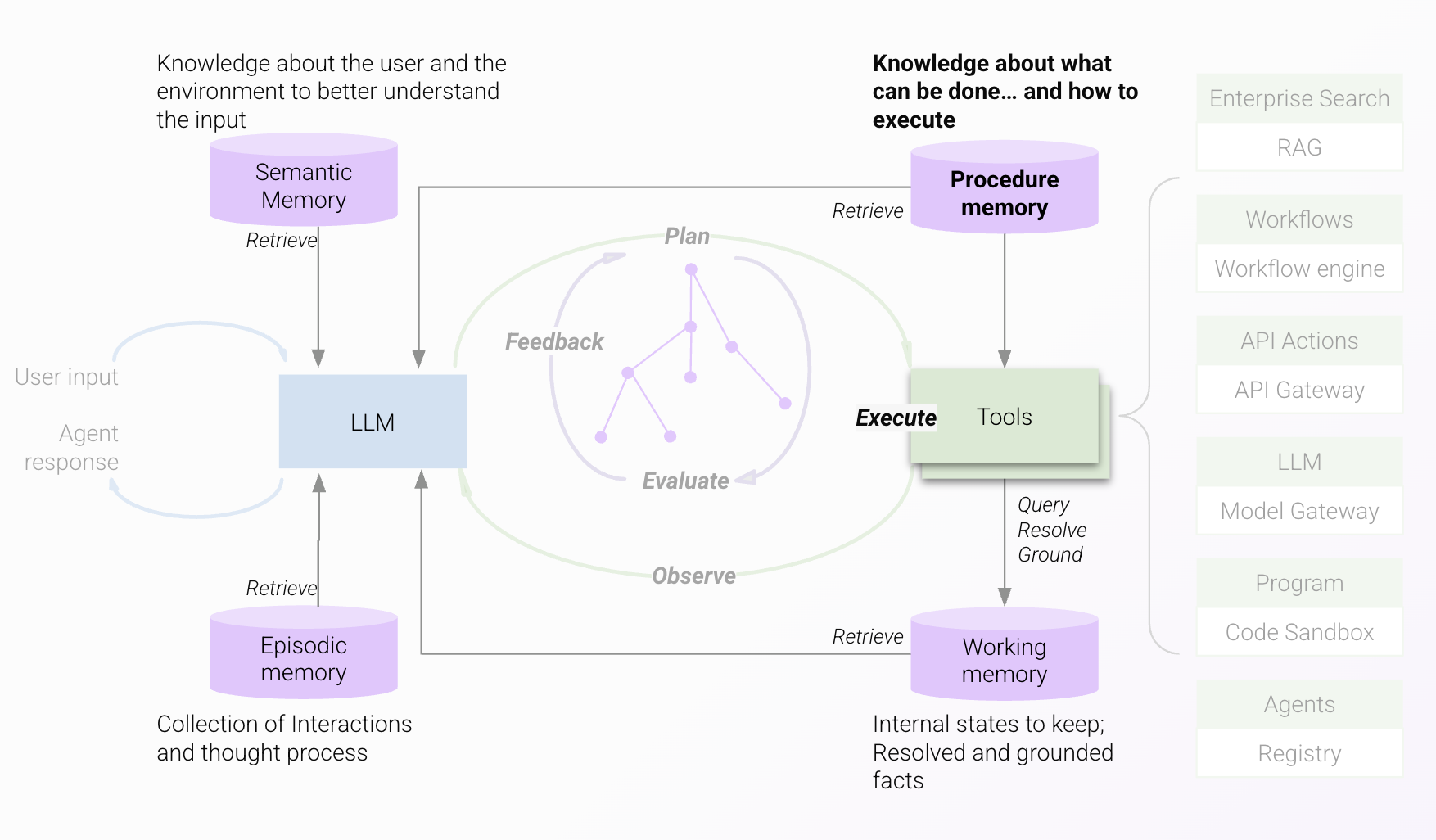

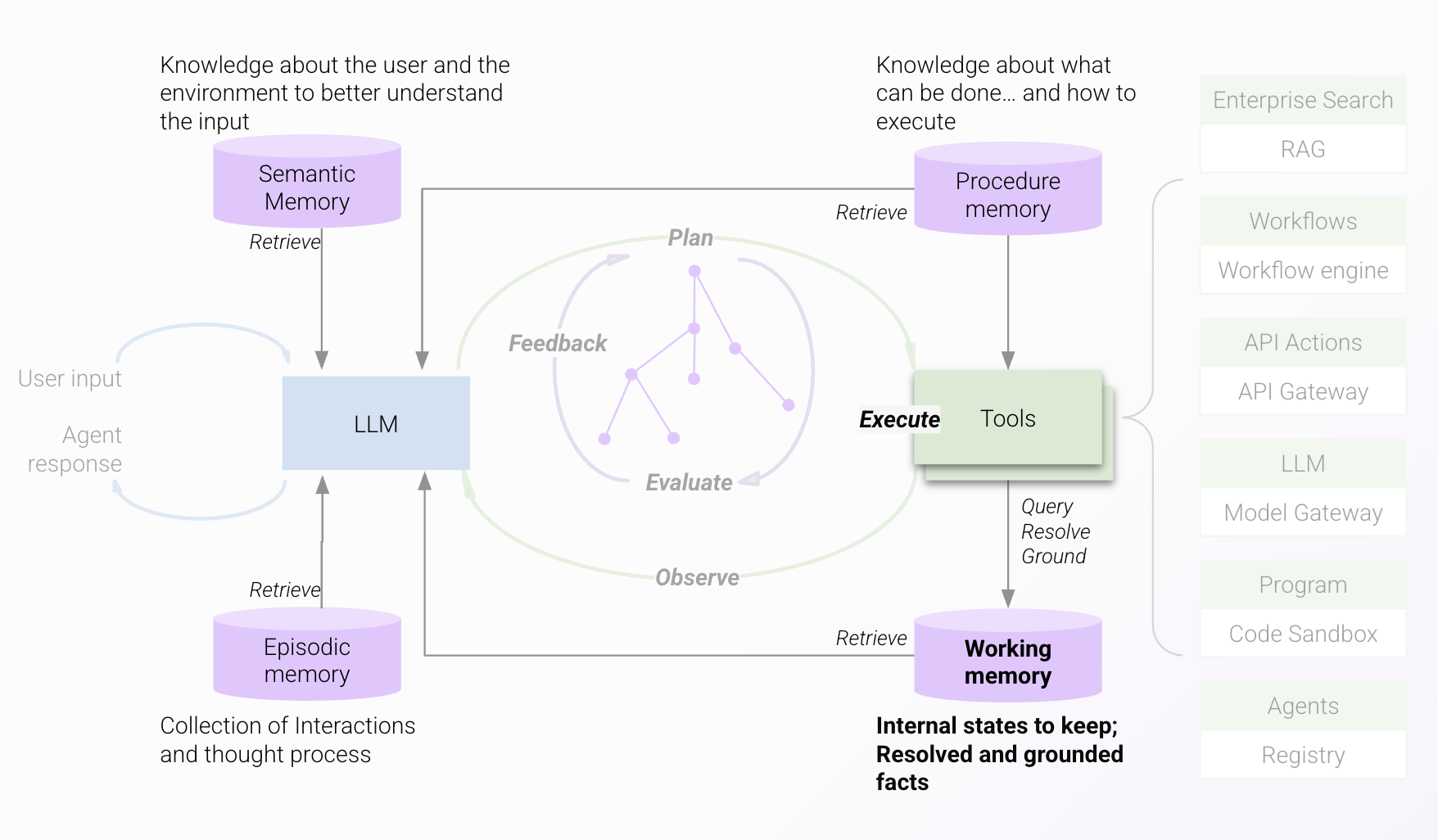

Memory Constructs

The Moveworks AI Assistant uses four kinds of memory so that it has the most complete knowledge and context available at every stage of handling a user's request. Together, these four types store the data needed to build a complete picture of the current state, and of the possible future states, given the specifics of the environment and the conversation in progress:

- Semantic memory - to understand the terms used by the user

- Episodic memory - to recall past conversations with the user

- Procedure memory - to assess what options are available in the environment

- Working memory - to keep track of what is in flight, and to ground the responses with accurate references
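To make the division of responsibilities concrete, here is a minimal Python sketch of how the four memory types could be held side by side and combined into context for a single request. The `AssistantMemory` class, its fields, and `build_context` are hypothetical names chosen for illustration; they are not the Moveworks implementation, and the naive word matching stands in for whatever retrieval the real system uses.

```python
from dataclasses import dataclass, field


@dataclass
class AssistantMemory:
    """Hypothetical container for the four memory types (illustration only)."""

    # Semantic memory: organization-specific terms and what they mean.
    semantic: dict[str, str] = field(default_factory=dict)
    # Episodic memory: the conversation so far, as (speaker, message) turns.
    episodic: list[tuple[str, str]] = field(default_factory=list)
    # Procedure memory: tools or processes available in the environment.
    procedures: dict[str, str] = field(default_factory=dict)
    # Working memory: in-flight operations and the stage each has reached.
    working: dict[str, str] = field(default_factory=dict)

    def build_context(self, user_message: str, recent_turns: int = 4) -> dict:
        """Gather what each memory type contributes to handling one message."""
        # Naive single-word matching; a real system would use proper retrieval.
        words = {w.strip(".,?!").lower() for w in user_message.split()}
        return {
            "terms": {t: d for t, d in self.semantic.items() if t.lower() in words},
            "recent_turns": self.episodic[-recent_turns:],
            "available_tools": sorted(self.procedures),
            "in_flight": dict(self.working),
        }


mem = AssistantMemory(
    semantic={"PTO": "paid time off", "VPN": "virtual private network"},
    episodic=[("user", "Hi"), ("assistant", "Hello! How can I help?")],
    procedures={"submit_pto_request": "File a time-off request in the HR system"},
)
context = mem.build_context("How much PTO do I have left?")
print(context["terms"])  # {'PTO': 'paid time off'}
```

Keeping the four stores separate lets each be updated on its own schedule (terminology rarely, conversation turns constantly) while a single call assembles what the current request needs.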

Semantic Memory

Knowledge of the content, entities and terminology used by the organization. Needed to understand the semantics of the conversation with the user.

Episodic Memory

Awareness of the current context in the conversation with the user, i.e. what questions and answers have been exchanged and what decisions have already been made - Needed to have a truly interactive conversation with the user, where every response is unique and tailored to the context.

Procedure Memory

Knowledge of the tasks that can be performed in the environment for the user, and of the business processes or rules that should be followed - Needed to select the right tools for the request and to decide how to apply them.

Working Memory

Awareness of what operations and processes are in progress, and which stage of completion each has reached - Needed to make sure that multi-step synchronous and asynchronous processes are tracked and driven to completion. Also important to ensure that responses are anchored in references, so that the user can verify facts as they wish.
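The working-memory role described above, tracking each in-flight process, the stage it has reached, and the references behind its results, can be sketched as a small state tracker. This is a minimal Python illustration under assumed names (`Operation`, `WorkingMemory`, `complete_step`, `wait`, `in_flight`); it is not how Moveworks implements working memory, and the ticket number in the usage is made up.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Operation:
    """One multi-step process tracked in (hypothetical) working memory."""

    name: str
    steps: list[str]
    completed: int = 0                 # steps finished so far
    waiting_on: Optional[str] = None   # set while blocked on an asynchronous event
    references: list[str] = field(default_factory=list)  # sources that ground the answer

    @property
    def done(self) -> bool:
        return self.completed == len(self.steps)

    @property
    def stage(self) -> str:
        if self.done:
            return "done"
        if self.waiting_on:
            return f"waiting: {self.waiting_on}"
        return self.steps[self.completed]


class WorkingMemory:
    def __init__(self) -> None:
        self.operations: dict[str, Operation] = {}

    def start(self, op_id: str, name: str, steps: list[str]) -> None:
        self.operations[op_id] = Operation(name, list(steps))

    def complete_step(self, op_id: str, reference: Optional[str] = None) -> str:
        """Mark the current step finished, record its source, and return the new stage."""
        op = self.operations[op_id]
        if op.done:
            raise ValueError(f"{op_id} is already complete")
        op.completed += 1
        op.waiting_on = None
        if reference is not None:
            op.references.append(reference)
        return op.stage

    def wait(self, op_id: str, event: str) -> None:
        """Park an operation until an asynchronous event (e.g. an approval) arrives."""
        self.operations[op_id].waiting_on = event

    def in_flight(self) -> dict[str, str]:
        """Everything not yet driven to completion, with its current stage."""
        return {op_id: op.stage for op_id, op in self.operations.items() if not op.done}


wm = WorkingMemory()
wm.start("req-1", "Laptop replacement", ["create ticket", "manager approval", "ship device"])
wm.complete_step("req-1", reference="ticket INC0012345")
wm.wait("req-1", "manager approval")
print(wm.in_flight())  # {'req-1': 'waiting: manager approval'}
```

Because unfinished operations stay listed in `in_flight()` until their last step completes, an asynchronous approval that arrives later can be matched back to the right process, and the stored references are available to cite in the final answer.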

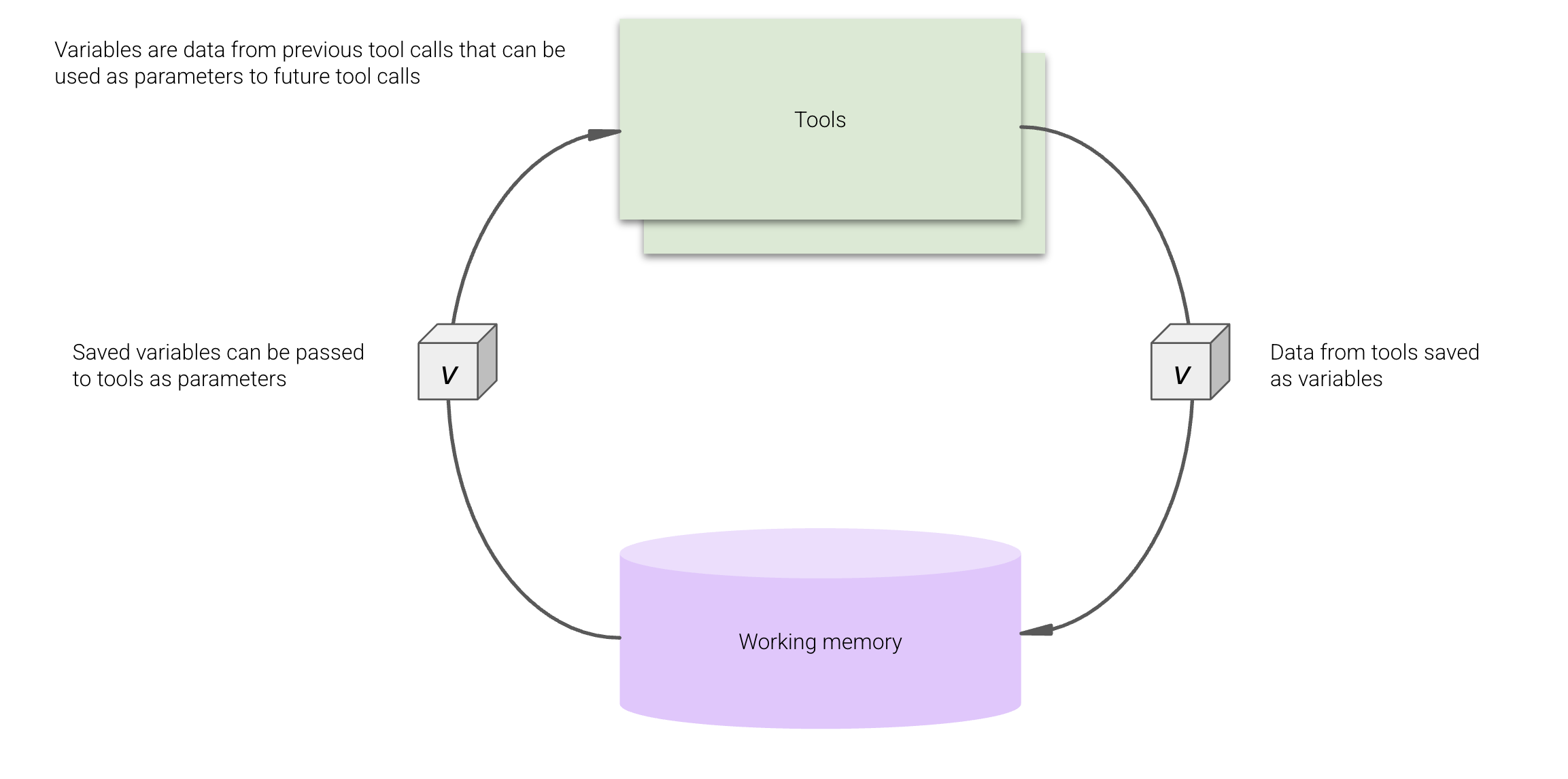

Variable Tracking

To improve the reliability of tool calling for enterprise systems, our working memory has a variable tracking framework that guarantees the integrity of your business objects. This delivers two core benefits:

- LLMs can't accidentally hallucinate or mix and match IDs, because the variable tracking system keeps them grounded.

- The reasoning engine can handle significantly more data. Operations are not limited by the size of an LLM's context window, so your AI Assistant can work with thousands of records and perform calculations using powerful plugins such as our built-in code interpreter.
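One way to picture variable tracking: tool results are stored outside the prompt, the model sees only a short handle and summary, and any ID passed to a tool must be resolved from a tracked variable rather than typed out by the model. The Python sketch below assumes hypothetical names (`VariableStore`, `put`, `summary`, `resolve`, the `$var_N[i].field` reference syntax, and the `id_params` argument); it illustrates the idea and is not the Moveworks API.

```python
import re
from typing import Any

# Hypothetical reference syntax: $var_N, optionally followed by [index] and/or .field
REF = re.compile(r"^\$(var_\d+)(?:\[(\d+)\])?(?:\.(\w+))?$")


class VariableStore:
    """Keeps tool outputs out of the LLM context; the model only sees handles."""

    def __init__(self) -> None:
        self._values: dict[str, Any] = {}

    def put(self, value: Any) -> str:
        """Store a tool result and return the handle the model will refer to."""
        name = f"var_{len(self._values) + 1}"
        self._values[name] = value
        return name

    def summary(self, name: str) -> str:
        """Compact description shown to the model instead of the raw data."""
        value = self._values[name]
        if isinstance(value, list):
            return f"${name}: list of {len(value)} records"
        return f"${name}: {type(value).__name__}"

    def _lookup(self, ref: str) -> Any:
        match = REF.match(ref)
        if match is None or match.group(1) not in self._values:
            raise KeyError(f"unknown variable reference: {ref!r}")
        value = self._values[match.group(1)]
        if match.group(2) is not None:
            value = value[int(match.group(2))]
        if match.group(3) is not None:
            value = value[match.group(3)]
        return value

    def resolve(self, args: dict[str, Any], id_params: set[str]) -> dict[str, Any]:
        """Swap $-references for real values; reject literal values for ID parameters."""
        resolved = {}
        for key, arg in args.items():
            if isinstance(arg, str) and arg.startswith("$"):
                resolved[key] = self._lookup(arg)
            elif key in id_params:
                raise ValueError(f"{key!r} must reference a tracked variable, got {arg!r}")
            else:
                resolved[key] = arg
        return resolved


store = VariableStore()
tickets = [{"id": f"INC{i:05d}", "priority": "P1" if i % 10 == 0 else "P3"} for i in range(2000)]
all_tickets = store.put(tickets)
print(store.summary(all_tickets))  # $var_1: list of 2000 records
# Filtering runs in code over the full data, not inside the context window.
urgent = store.put([t for t in tickets if t["priority"] == "P1"])
print(store.summary(urgent))  # $var_2: list of 200 records
args = store.resolve({"ticket_id": f"${urgent}[0].id", "note": "Escalating"}, id_params={"ticket_id"})
print(args)  # {'ticket_id': 'INC00000', 'note': 'Escalating'}
```

In this sketch the model never copies an ID character by character; it can only point at a record the system already holds, so a made-up or mismatched ID fails loudly instead of reaching the enterprise system, and the 2,000 records never have to fit in the prompt.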

Read more about the architecture here.
